Introduction

Today we’re going to talk about bringing machine learning to your iOS apps. This was a really big topic at WWDC 2017, which was a little bit unexpected – I thought there would just be a couple of updates, but I’m sure you’ve been hearing a lot about machine learning already this week.

I’m an iOS developer at SoundCloud in Berlin and I have a background in math and CS. I studied a little bit of machine learning in college but nothing too substantial that was practical, so I had to relearn pretty much everything when I got back into ML recently.

Agenda

- Why and when to use ML in your apps: A crash course in machine learning foundations, just so everyone’s on the same page about some of the terminology.

- We’ll go through the Apple-provided APIs. This has been updated with the WWDC 2017 APIs.

- The workflow that you would typically use as a developer using these APIs.

- Creating a custom machine learning model using Keras which is an open source tool based on Python.

- We’ll discuss tools for further learning since this is such a big topic, so you can pursue Machine Learning further on your own.

Machine Learning, Defined

So what is machine learning?

Probably all of you know what it is at this point, but just in case, in a nutshell it’s enabling machines to learn like babies. Instead of explicitly telling the machine what you want it to do, the machine should infer this over time based on different ways of modeling. Arthur Samuel, who was an AI pioneer, defined it as the field of study that gives computers the ability to learn without being explicitly programmed.

A very simple machine learning problem would be image classification. So this is a picture of the SoundCloud office dog, and if we pass a picture of this dog to a machine learning model we would expect the model to classify it as a dog, not a cat or a turtle or something. Other common examples are face detection, which is done in the Photos app that’s on your iPhone by default, and music recommendations, or basically any type of recommendation system. Handwriting recognition is a little bit more difficult, but we can use machine learning for that too.

Apple uses some of these techniques internally in Siri, Camera, and QuickType. Typical applications include image recognition, face detection in a photo, or, at SoundCloud, creating a radio station based on a user’s music tastes. There are so many other examples of machine learning problems, but these are a basic start.

Why Use Machine Learning

Machine learning enables a more meaningful experience. This is one of the main reasons why you would want to use it. Personalization, identification, and extracting meaning can only help your app. Users often have a harder time describing what they want versus knowing it when they see it. Maybe they don’t have the vocabulary to describe a really fancy bicycle, but they know when they see it that it’s a racing bicycle and it has certain parameters to it. Recalling is harder than recognizing.

Using Apple’s machine learning APIs that we’ll go through later, we’re going to be able to do inference on the device. You’re going to already have to have a pre-trained model and then you’re going to use that model to predict outputs given some inputs.

But why would we want to actually do this on the device rather than doing it in the cloud, using for example some of the out-of-the-box solutions from Amazon or Microsoft? Pretty much every big company has some sort of cloud service that’s a machine learning as a service offering. Why would we want to actually have the model and put it on the device and keep it there?

Apple went through it in the Platform State of the Union as well as some of the machine learning sessions if you’ve gone to any of them or watched them. Some of the key reasons why you’d want to do this also play to the strengths of Apple’s offerings in general, especially data privacy. If you have the machine learning model on the device, then it stays there and you can be sure that the user’s data is kept there.

The second reason is free computing power. If you’re going to be using one of the cloud services such as the one that Amazon provides, you’re going to have to pay for that, whereas if you’re doing inference on the device you can utilize the computing power that’s already there for free. It will also always be available: once you have the model, inference doesn’t use the network, so it works even when the device is offline.

Finally, Apple’s machine learning is optimized for on-device performance. Apple has done a really good job, and they showed some data around this, of utilizing either the CPU or the GPU depending on what type of model it is and what you’re trying to do. We can take advantage of the massive GPU gains that have happened in recent years.

Last but not least, it minimizes the memory footprint and the power consumption. Apple has optimized these things and this used to be an issue if you were trying to do inference on the device but now with the new APIs they’ve done a lot of optimizations around this, and it’s practical to use at this point.

Adding Machine Learning to your App

If you wanted to add machine learning to your app, you probably want to come up with a reason why you’re going to do this and approach it scientifically.

I would suggest identifying a key performance indicator of your app. For example, in SoundCloud, if we were to add some of these machine learning libraries, perhaps our KPI would be the listening time that an average user spends on a daily basis, and we would formulate the hypothesis about how machine learning will impact that KPI, conduct the experiment scientifically, and measure performance before and after. We should verify that it’s actually doing something, and if it’s not then regroup and try a different machine learning technique or do something else.
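As a small illustration of measuring a KPI before and after, here is a sketch in Python that computes the relative change in average daily listening time. The function name relative_lift and all of the numbers are made up for illustration; a real experiment would also compare against a control group and check statistical significance.

```python
from statistics import mean


def relative_lift(before, after):
    """Relative change in the average KPI value, e.g. 0.05 means +5%."""
    baseline = mean(before)
    return (mean(after) - baseline) / baseline


# Hypothetical daily listening minutes per user, before and after
# shipping an ML-powered feature. These are not real measurements.
minutes_before = [42.0, 38.5, 45.0, 40.5]
minutes_after = [44.0, 41.5, 47.0, 43.5]

lift = relative_lift(minutes_before, minutes_after)
print(f"Average listening time changed by {lift:.1%}")
```

If the lift is flat or negative, that is the signal to regroup and try a different technique.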

ML Fundamentals Crash Course

What is a model? A model is just a system that outputs a prediction, given input. It’s highly mathematical so this is a very big abstraction, but you don’t really have to worry about the low level details if you’re just using Apple’s APIs. That’s the beauty in it.

Neural Network Basics

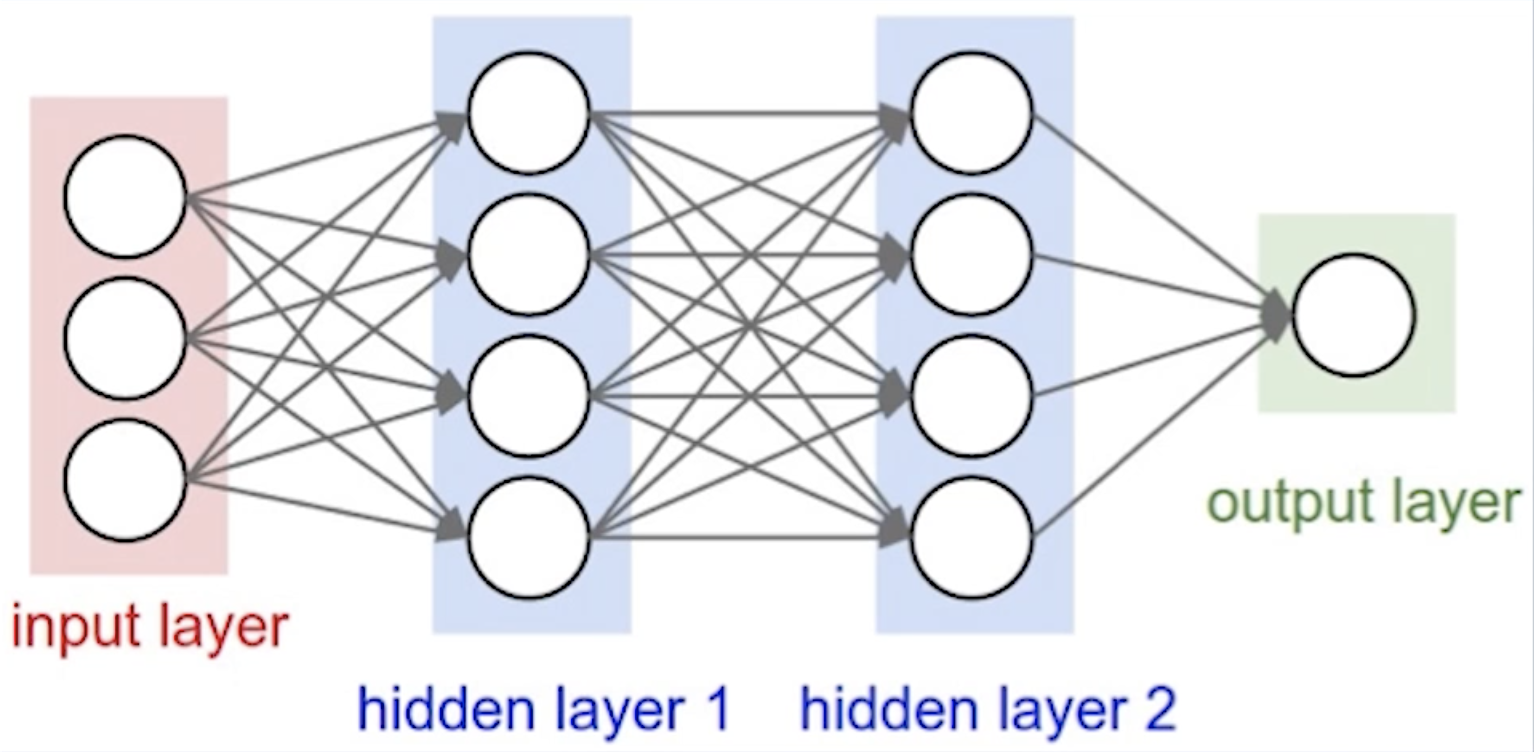

There are many different types of machine learning techniques, but the one that you’ll hear about over and over again is neural networks. The goal of a neural network is to learn by itself, like a brain. It’s a highly interconnected system of neurons, or nodes, and it is dynamic, so it processes input and updates its state as it responds to the input.

Neural networks come in all shapes and sizes. There is actually a “neural network zoo” where you can see all of the different topologies. Again you don’t have to worry about the low-level details and can just abstract it away, but if you want to get into it it’s pretty awesome.

So, how does this work? What does it look like from a high level? Given a set of inputs on the left above, you pass the input into the neural network and it goes through a series of layers. As it’s passed through these layers, weights are applied at the nodes, and each layer applies a transformation to the data to produce an output. The network topology, the activation function, and other properties determine how the neural network operates. You eventually get an output, and you can compare that output to your expected output to improve your model in the future.
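To make the idea of layers, weights, and an activation function a bit more concrete, here is a minimal sketch of a forward pass in plain Python. The weights are arbitrary made-up numbers and the helper names (dense_layer, forward) are just for illustration; real networks are far larger and use optimized libraries.

```python
import math


def sigmoid(x):
    """Activation function: squashes any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def dense_layer(inputs, weights, biases, activation):
    """One layer: each node takes a weighted sum of the inputs, then activates."""
    outputs = []
    for node_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(node_weights, inputs)) + bias
        outputs.append(activation(total))
    return outputs


def forward(inputs, layers):
    """Pass the input through every layer in order and return the final output."""
    values = inputs
    for weights, biases in layers:
        values = dense_layer(values, weights, biases, sigmoid)
    return values


# Topology: 3 inputs -> 2 hidden nodes -> 1 output node (illustrative weights).
LAYERS = [
    ([[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], [0.0, 0.1]),
    ([[1.0, -1.0]], [0.0]),
]

prediction = forward([1.0, 0.5, -1.0], LAYERS)
print(prediction)
```

Changing the number of layers or nodes changes the topology; changing the numbers inside LAYERS is what training does.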

Training the Model

To improve our models we need training. When we’re training the neural network, what we mean is that it will cycle through many iterations of input from the training data set. Over time the neural network evolves and different synapses start to grow, which means that they’re more important, and this corresponds to changing the weights in the equation of the neural network. Once you have trained your model you have an equation that describes it, and you’ll use that later to run inference. This is saved in the ML model format that you can use in your app.

Having trained the model, when you have new data you run the model on the new data, and this is called inference. This gives you an output which is a prediction. So where is all of this done? Apple’s API offerings only allow inference on the device. You need to have a pre-trained model already to run inference.
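Here is a toy sketch of the difference between training and inference, using a single neuron in plain Python rather than a real neural network. It learns the logical OR of two inputs by repeatedly nudging its weights, and then the trained weights are used to predict on an input it has not seen. The data set, learning rate, and function names are assumptions chosen for illustration.

```python
import math


def predict(weights, bias, features):
    """Inference: apply the learned weights to an input and return a score in (0, 1)."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def train(examples, epochs=2000, learning_rate=0.5):
    """Training: cycle through the data many times, adjusting weights to reduce error."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            error = predict(weights, bias, features) - label
            weights = [w - learning_rate * error * x for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias


# Tiny labeled training set: the output is 1 if either input is 1 (logical OR).
TRAINING_SET = [
    ([0.0, 0.0], 0),
    ([0.0, 1.0], 1),
    ([1.0, 0.0], 1),
    ([1.0, 1.0], 1),
]

weights, bias = train(TRAINING_SET)

# Inference on new data: only the trained weights are needed, not the training set.
new_prediction = predict(weights, bias, [0.9, 0.8])
print(new_prediction)
```

The expensive part is train; predict is cheap, which is why running only inference on the device is practical.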

Choosing a Pre-Trained Model

So then where do we get the model? Training a model requires a lot of money. If you want to do this on your own and create custom models, you’re going to incur a lot of server costs for this.

Apple provides four pre-trained models off the shelf. These models do a really good job of recognizing most everyday images and objects, so they will recognize a coffee versus a computer versus a phone versus a microphone. This works for a lot of use cases, so I would recommend starting there. The four models have different sizes for different use cases.

A second option is to use open source offerings. Keras is an open source tool for training that we’ll go through. They have several pre-trained models that you can start with.

Apple’s Machine Learning APIs

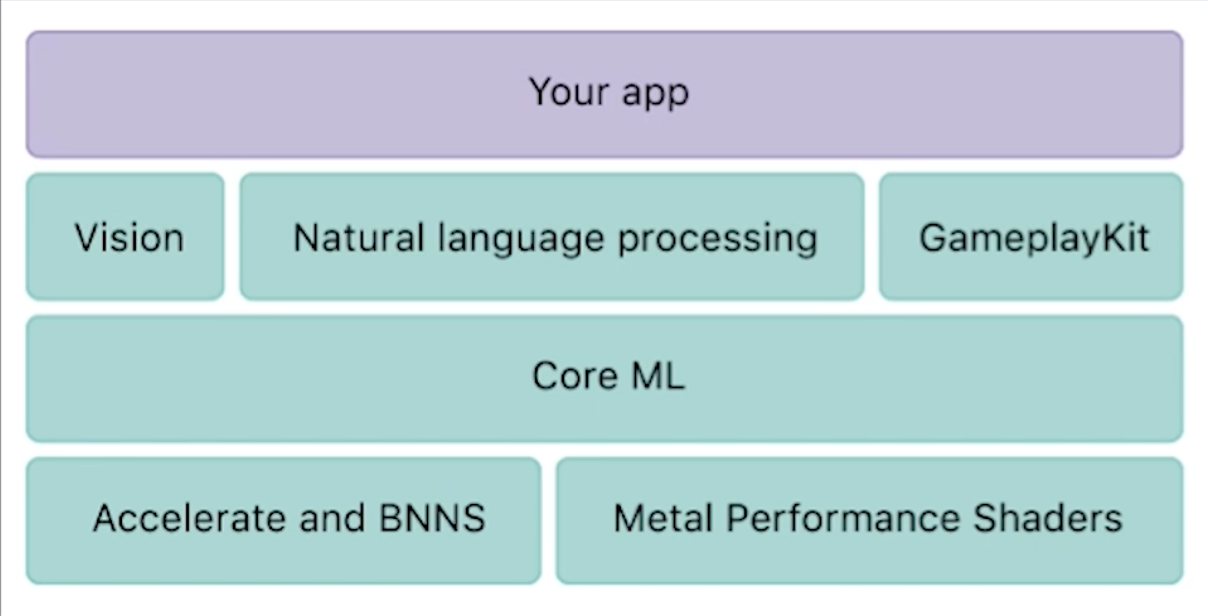

In iOS 11 Apple gave us a lot of gifts with regards to machine learning for iOS. At a high level you have your app, and then there are three different frameworks you can use that will help you with certain machine learning problems:

- Vision framework

- Natural language processing framework

- GameplayKit framework

The Vision framework gives you computer vision utilities so you can add standard computer vision features to your app. For example, it has face tracking, face detection, text detection, shape detection, barcode detection, and more of those types of things.

Natural language processing can be used for extracting meaning out of any type of text, and this is included in Foundation. Some examples of when you would use this would be language identification, part-of-speech tagging, name recognition, or anything that takes some text, breaks it down, and tries to extract meaning.

GameplayKit is something I don’t know as much about, but it uses something called learned decision trees.

The next level down is Core ML. You can decide to use one of these three higher level APIs directly in your app, or you can use Core ML instead, where you’re integrating pre-trained ML models provided by Apple or imported from open source places, or your own custom models. Apple has provided us a really nice unified API for using any of these types of models in the ML model format, and you only need to write a few lines of code to do really cool things.

You can also go one level deeper, which is what you were able to do before iOS 11: you can directly use Metal Performance Shaders, which is part of the Metal framework and heavily utilizes the GPU. You’re going to have to roll a lot of stuff on your own, and it’s a lot more work – but you would have control and know it would go directly to the GPU. Core ML will optimize and decide whether to use the CPU or the GPU, so that’s abstracted away from you. You could also use BNNS, which is part of Accelerate.

Integrating Core ML

BNNS is a CPU-centric API for utilizing ML. We’ll focus mostly on Core ML and how the workflow goes, and then how you would import the model and what types of things you could do with it.

There are many different types of machine learning methods that Core ML supports for you: neural networks, tree ensembles, support vector machines, and generalized linear models. Apple has covered a lot of ground with the number of machine learning techniques on offer.

So what does this workflow look like? How do you actually use this model and improve your app?

Integrating Core ML is really simple. First, you’re going to import the ML model into Xcode, for example one of the four that Apple offers in its documentation. Xcode will generate a Swift class named after that model. One of the examples they used at WWDC was the MarsHabitatPricer model, which is basically a prediction model about pricing if you were going to live on Mars.

You would initialize that model just with MarsHabitatPricer() – it’s as simple as that. Then, to make a prediction, you call prediction on the model instance with the inputs that you have.

Then you ship it! (after you build it and run it and test it, and make sure that it’s actually working…)

Third-Party Models

But what if you want to customize a machine learning model? When would you want to build a custom model? When you look at the Apple models they give a description of what they can be used for. When one of those doesn’t really add any value to your app then it’s probably time to look into a custom model.

One of the first options is the Places205-GoogLeNet model. It detects the scene of an image from 205 categories, so it can detect if you’re at an airport, for example. It gives you the high level description that you’re at an airport instead of picking out the plane that might be in the foreground of the image.

There are also three other models, ResNet50, Inception v3, and VGG16, that deal with detecting the dominant object in a photo, so they ignore the background, and there are around 1,000 categories. Each of these covers a certain number of categories, and they each have a different size. That’s something that you need to consider because these are quite large. While the VGG16 model has been widely adopted in machine learning across disciplines, it’s not really that practical to include in your app, because the model is bundled in when you build and can use up quite a bit of memory.

Open Source Models

I would recommend going to the Keras GitHub page for them. Apple came up with a Core ML tools Python package, coremltools. Most of the machine learning community is centered around Python, and while a lot of us probably aren’t Python developers, there’s a lot of documentation and a lot of resources around just doing what you need to do in Python in order to use it in your app. I don’t think this should be seen as a barrier – we should embrace that there’s already a community out there that’s done a lot for us. In order to use this you have to have a Python environment set up, so I’ll go a little bit through that. Essentially you run pip install -U coremltools.

pip is the package manager for Python, so it’s similar to installing a CocoaPod. Next you convert your model from Caffe or Keras or another tool into a Core ML model and save that model. In the Apple documentation they tell you which formats you’re able to convert from, but the ones that are really popular are Keras and Caffe.
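As a rough sketch of that conversion step, the function below wraps the Keras converter that shipped with the coremltools releases of this period. It assumes coremltools and a compatible Keras are installed, and the wrapper name convert_keras_to_coreml is my own. Newer coremltools releases may have changed the converter entry point, so treat the exact call as an assumption to check against the documentation for your installed version.

```python
def convert_keras_to_coreml(keras_model_path, mlmodel_path):
    """Convert a saved Keras model (.h5) to a Core ML model file (.mlmodel).

    Assumes the 2017-era coremltools Keras converter is available.
    """
    # Imported lazily so this file can be loaded without coremltools installed.
    import coremltools

    # The converter accepts a path to a saved Keras model file.
    coreml_model = coremltools.converters.keras.convert(keras_model_path)

    # Write the .mlmodel file that you then drag into Xcode.
    coreml_model.save(mlmodel_path)
    return coreml_model
```

You would call it with something like convert_keras_to_coreml("bikes.h5", "BikeClassifier.mlmodel"), where both file names are hypothetical.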

Keras

I’m going to briefly go through how you would approach making a custom model using Keras, so you can get started and you don’t feel like this is something that’s out of reach. Keras is a Python library that’s used to develop and evaluate deep learning models. This is exactly what you would need if you’re trying to create a model that has a more specific use case than what’s already provided.

For example, if you wanted to use one of the Apple-provided models, it might be able to tell a car from a bicycle but it wouldn’t be able to tell a specific type of bicycle from another: a fixed-gear bicycle versus a road bike versus a mountain bike versus a hybrid bike. In that case you would need to have your own data set, label these images with what type of bike it is, and then create your own model to do that. Keras is backed by an efficient numerical computation library, which is basically an engine for being able to create the model.

The common ones that it supports are TensorFlow and Theano. I’ve been using TensorFlow in some side projects because it’s very well documented, and Google does a really good job of supporting it.

But… Python? 🐍😑

I already went through this a bit, but I think that learning the basics of Python or any new language really opens your eyes up to the world of programming. It’s a really good way to see similarities to a language that you’re already using, for example Swift, and it’s also good to see the types of features that it offers that are different from Swift.

It’s a good exercise in learning more about programming language theory, and what’s practical for what use case. Python is really beginner-friendly. A lot of universities offer this as the first language that they teach, like MIT and Stanford, as well as open courseware-type classes that are online, so it’s a common language to be used in a lot of these learning tools. I would recommend looking at the Udacity course for Python if you are really unfamiliar with it, because it’s really quick and to the point and it doesn’t go through a lot of the boring programming exercises that you would normally see in an intro language course.

To set up your Python environment, there’s a really easy way to do this using Anaconda. Anaconda is an open source data science platform that manages your Python packages, dependencies and environments so that you don’t have to do it yourself. You can download it and decide to use Python 2.7 or 3.6. There’s a very big difference between Python 3.0 and above and pre-Python 3.0, so whatever you decide to use it with, just choose that version accordingly.

Keras Models

A Keras model is defined as a sequence of layers, just like the earlier high level overview of a neural network. You’re going to be creating these layers in code. We’re going to create a sequential model and add layers one at a time until we’re happy with our network topology. The first thing to do is make sure that you’re getting the number of inputs correct. Because we won’t go into too much detail in this talk, check out this tutorial for building your first neural network using Keras with Python.

We’ll show briefly how little investment it is in order to be able to do this. Compared to an iOS app code base, there’s very little code involved:

- Load the data

- Define the model using sequential layers

- Compile the model

- Fit the model, which just means execute the model on some data to train it.

- Evaluate the model – run a data set through it and see whether or not your actual output matches your expected output.

And then you’re done – it’s only 31 lines of code to be able to do this, so it’s very approachable. Once you have that custom model you’re going to use the Core ML tools package that Apple developed, which will generate an ML model for you, and then you can use it in your app.
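The five steps above can be sketched roughly like this. It is not the code from the talk or the tutorial: it assumes a CSV file of numeric rows whose last column is a 0/1 label, it assumes Keras (with a backend such as TensorFlow) and NumPy are installed, and the layer sizes and function names are illustrative choices written against the Keras 2-style Sequential API.

```python
import csv


def load_rows(csv_path):
    """Step 1: load the data as rows of floats (last column is the label)."""
    with open(csv_path, newline="") as handle:
        return [[float(value) for value in row] for row in csv.reader(handle) if row]


def split_features_labels(rows):
    """Separate each row into its input features and its expected output."""
    features = [row[:-1] for row in rows]
    labels = [row[-1] for row in rows]
    return features, labels


def train_and_evaluate(rows, epochs=150, batch_size=10):
    """Steps 2 to 5: define, compile, fit, and evaluate a small Keras model."""
    # Imported lazily so the helpers above work without Keras installed.
    import numpy as np
    from keras.models import Sequential
    from keras.layers import Dense

    features, labels = split_features_labels(rows)
    x = np.array(features)
    y = np.array(labels)

    # Step 2: define the model as a sequence of layers.
    # input_dim must match the number of input features.
    model = Sequential()
    model.add(Dense(12, input_dim=x.shape[1], activation="relu"))
    model.add(Dense(8, activation="relu"))
    model.add(Dense(1, activation="sigmoid"))

    # Step 3: compile the model with a loss function and an optimizer.
    model.compile(loss="binary_crossentropy", optimizer="adam", metrics=["accuracy"])

    # Step 4: fit the model, i.e. train it on the data.
    model.fit(x, y, epochs=epochs, batch_size=batch_size, verbose=0)

    # Step 5: evaluate. For brevity this reuses the training data;
    # a held-out data set gives a more honest accuracy.
    loss, accuracy = model.evaluate(x, y, verbose=0)
    return model, accuracy
```

Calling model.save on the returned model writes the kind of .h5 file that coremltools can then convert.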

Test & Instrument

I think that it’s really wise to test your app as always, and then instrument your app. Considering that these models can be fairly sizeable compared to the rest of the assets in your app, you should use Instruments to make sure that you’re not causing humongous memory spikes or anything else that might negatively affect the user experience. It’s important to be doing this when you’re using machine learning libraries because it’s something new and something that uses a lot more memory than you usually would.

Thoughts on the Future of Machine Learning

I’ve been following this space for maybe the last year, and I’ve been watching what’s been happening in other communities like in Android and the developer community as a whole. What do we think’s going to happen? Apple continues to invest heavily in ML – we’ve seen this in the WWDC 2017 updates and they have hired a lot of machine learning experts over the past couple of years, and it’s finally getting brought into the developer tools, as well as the apps that are provided by us. It’s going to be interesting to see how developers utilize these new APIs, because this could significantly improve the user experience for a lot of apps and open up a whole realm of possibilities for improving apps using machine learning.

I hope that there will be improved machine learning resources. To put the machine learning field in perspective, in 2009 at MIT it was deemed not something that was super practical to be using. So much of machine learning has evolved just since then, within the last decade, and a lot of resources have only come about since then. I wouldn’t recommend starting with a linear algebra textbook because that might just be unnecessary and scare you away. Hopefully there will be more resources that are tailored toward iOS developers.

I think this will come from both Apple and the community. Apple announced that it’s making its own GPUs a couple of months ago, so I think that will really help us out in what’s offered on the device – both mobile devices as well as laptops and other Apple products. I think that it’s possible that they’re going to put an optimized machine learning chip on the device. This is something that is being talked about across other communities, and I follow a lot of podcasts that think this is something that seems to be natural and this would make it a lot easier to make use of machine learning libraries without negative performance effects.

Additional Resources

This is going to be a space that’s evolving pretty quickly, so if you’re interested in following some blogs and newsletters and podcasts, I’m actually starting one called Let’s Infer. I’m going to put the first newsletter out after WWDC in a couple of days, and then a companion podcast.

ML News:

- This Week in Machine Learning and AI

- Keras Blog

- machinethink.net/blog

- alexsosn.github.io/ml/2015/11/05/iOS-ML.html

Courses:

- Udacity’s Intro to Machine Learning: uses Python and is free!

- Udacity’s Intro to Data Science Nanodegree

- Coursera’s Machine Learning at Stanford

I’m also making a bike app that uses machine learning, which is why I was using the bike example. It’s going to help users figure out what type of bike to buy, since a first-time buyer usually doesn’t have the vocabulary to describe, for example, a fixed gear bike with bullhorn handle bars and a steel frame. Instead the app provides you recommendations, and then you can buy it in the app.

Q&A

Audience Member: I was wondering if you had any suggestions on how to apply machine learning to music, specifically anything to detect beats or patterns, or common algorithms or formulas? When I studied back in college, as far as analyzing the signal, things that were helpful were fast Fourier transforms and stuff like that. Is there an equivalent to that kind of stuff in machine learning?

Meghan: Absolutely. That’s one of the biggest use cases of machine learning, and while I don’t work on the machine learning team at SoundCloud, I go to all of their book clubs, where they go through some topics and how they’re using it at SoundCloud and so there’s a lot of literature out there. I think on our SoundCloud blog we have some and Spotify has quite a lot, too, about how to build a music recommendations engine. A large part of it is being able to tag the songs with specific vectors, or specific labels, and then you choose however many labels you want. So maybe you want to put that it’s a hip hop song from the ’90s and it’s by this artist and it has this and this factor, it has this musical range. You can think of an infinite number of labels and then you would use that in whatever model that you’re creating. In my newsletter I guess I can put in one for that. It’s a little bit more involved and I know that Apple is providing some sort of music tagging setup too in what they released. That’s a very good use case of machine learning.

Audience Member: I think you said that either training or creating a model would be expensive in terms of time and money. Did you mean to say that even your bike example would be expensive to train?

Meghan: It depends. The sky is the limit on what you want to do in machine learning, and if you decide that you want to label your data with so many labels so that your data set is huge, and you want a lot of different images, for example, then training that model is going to become more and more expensive the more labels you have in it and the more images that you use.

If it’s so big then you can’t really do it on your local device. You’d have to be doing it on servers, and you would probably have to pay the server costs. If you want to use a machine learning as a service type of provider, you also have to pay for that after their free trial.

It can be expensive because it just adds up, and if you’re an individual developer any of these costs can add up. With my bike workshop app, the data set I’m coming up with myself is not that big. I can actually run it on my local device, on my laptop, and it doesn’t take that long – on the order of 15 minutes or so with what I have so far. The model isn’t finished yet though, so it might grow. I wouldn’t say it’s something that’s really expensive, but I’m saying that at scale it’s a quite expensive problem, so you can save money by doing some of this stuff on the device.

Audience Member: I’m just curious what approach you’d suggest if you can’t target iOS 11 and hence use ML kit?

Meghan: If you can’t use iOS 11 then you obviously can’t utilize the Core ML libraries that they’ve put out, but there are certainly ways to do this before iOS 11. You can use the Metal Performance Shaders convolutional neural network APIs, MPSCNN, or Accelerate’s Basic Neural Network Subroutines, BNNS. The tooling is not very good for it but it’s certainly possible.

Audience Member: Have you seen any examples of local training on device in your experience?

Meghan: I have not seen it with my own eyes. I know that you can do it. I know that Apple doesn’t provide ways of doing it for us, but I also know that Apple does it internally because they’ve mentioned it. For example, in the Photos app they are doing it on the device, and it will be really useful if we can also eventually do that. It’s not really something that’s that practical for us to do now because it’s very computationally expensive, but it hopefully will be cheaper and more practical in the future. I know there are ways to do it now though.

About the content

This talk was delivered live in June 2017 at AltConf. The video was recorded, produced, and transcribed by Realm, and is published here with the permission of the conference organizers.